Optopoculus Max - Spring 2016 Seattle VR Hackathon

Everyone should feel the joy of playing music. Technology can help.

What It Does

Optopoculus Max is a VR experience that shuttles you through four musical interfaces over the course of a song. As each part of the song changes, the scene evolves and the user is presented with a new interaction. All interactions are designed to be expressive while staying within music-theory constraints that keep them sounding good with the backing track.

These design choices and music theory assumptions give the end user a safe path to performing multiple types of instruments, including synthesizers, samplers, percussion, and microtonal effects.
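One common way to build this kind of constraint is to snap a continuous gesture onto the notes of a scale that matches the backing track. The sketch below is only an illustration in Python, not the Max patch we built: the scale, the root note, and the function name `quantize_to_scale` are all assumptions made for the example.

```python
# Hypothetical illustration: the scale and root note are assumptions,
# not the key actually used in Optopoculus Max.
PENTATONIC_MINOR = (0, 3, 5, 7, 10)  # semitone offsets from the root


def quantize_to_scale(position, root=57, scale=PENTATONIC_MINOR, octaves=2):
    """Map a normalized controller position (0.0 to 1.0) to a MIDI note in the scale."""
    # Clamp so out-of-range tracking data cannot produce an off-scale note.
    position = min(max(position, 0.0), 1.0)
    steps = len(scale) * octaves
    # Divide the gesture range into equal slots, one per available note.
    index = min(int(position * steps), steps - 1)
    octave, degree = divmod(index, len(scale))
    return root + 12 * octave + scale[degree]


if __name__ == "__main__":
    # Sweep a hand from one end of the range to the other.
    for i in range(11):
        print(i / 10, quantize_to_scale(i / 10))
```

Because every output is drawn from the same scale, any gesture lands on a note that fits the harmony, which is what makes the path "safe" for a first-time player.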

How It Was Built

Our team made the adventurous choice of constructing a VR world in a new programming environment and learning it as we built. We used Max, a dataflow programming language from Cycling '74, with OpenGL for visuals. Max is traditionally a signal processing environment for audio and video. The music was originally composed in Ableton Live with virtual instruments and drum machines.

Challenges

Bringing 3D meshes into this environment was very difficult. Max is good at using existing primitives and generating derivative visualizations, but traditional animation was not as accessible for us as it had been in our past experience with Unity3D. It was also challenging to balance presenting a strong narrative experience with providing modern instruments that let users explore their own creativity.

Achievements

- strong narrative with good pacing

- intuitive and fun interactions

- musically expressive interactions

- thought-provoking musical interaction

- team was brutally focused on priorities for the deliverable

What We Learned

- it's good to fail early (learn and move on)

- top-down design, bottom-up implementation

- need to establish and follow patterns for managing state in Max/MSP

- Max/MSP does not play well with text-diffing (git) -- I really miss branching and merging patterns on collaborative projects

- there is such a thing as too much pizza

What I Did

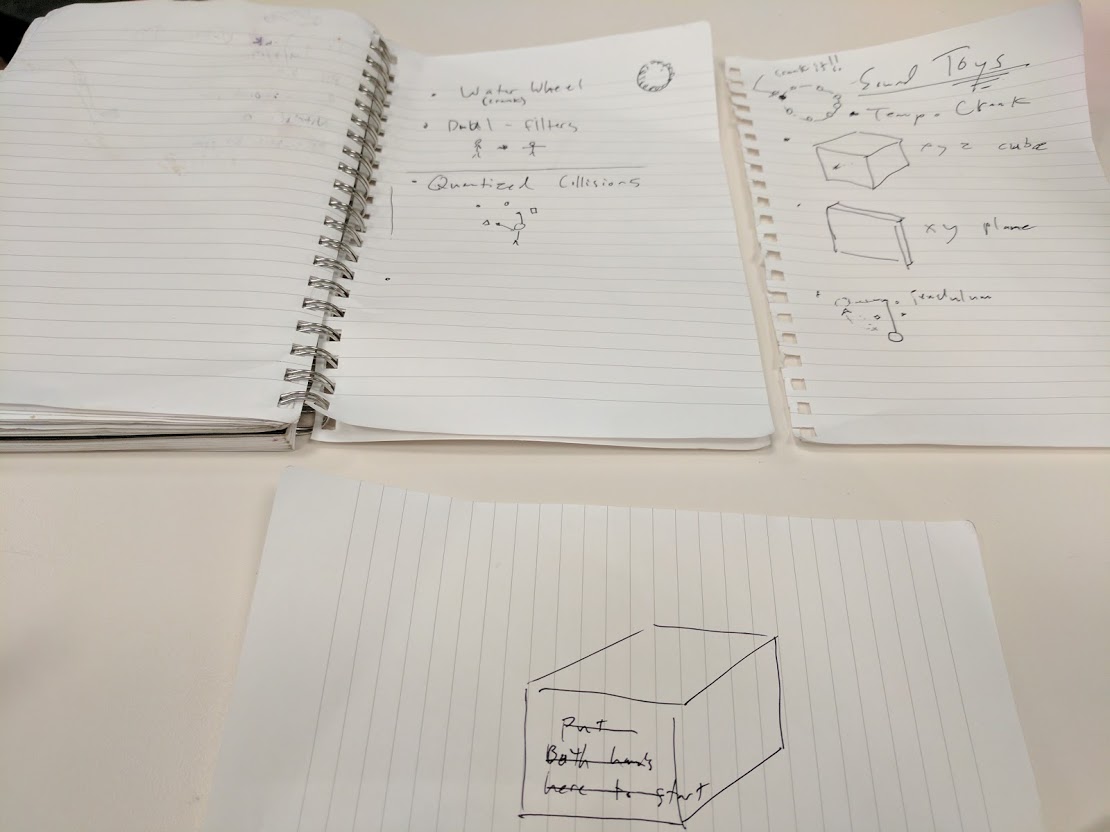

I recruited this team and founded the project. Choosing Max as the development environment was my decision. My other contributions included programming the scene sequence and UI, sound design for one of the instrument scenes and audio sampler trigger playback, and the overall storyboard and interaction design.

What's Next?

- record/playback gestures to buffer

- more gestural tracking and guidance

- deeper interface with tonal elements

- multiplayer and distance jamming